With the AWS CloudWatch support for S3 it is possible to get the size of each bucket, and the number of objects in it. Read on to see how you can use this to keep an eye on your S3 buckets to make sure your setup is running as expected. We’ve also included an open source tool for pushing S3 metrics into Graphite and an example of how it can be used.

CloudWatch

CloudWatch lets you collect pre-defined metrics for the AWS services that you use, and also lets you report and store your own custom metrics. Threshold-based alerts (“Alarms”) can be set to notify you as well as to take other actions (like scaling up an ASG). You can also graph your metrics and set up dashboards.

For most people though, the CloudWatch APIs are a way to get metric data on the AWS resources that they use. It’s usually simpler to pull the “Basic Monitoring” (lower frequency and less rich) data out of AWS and feed it into your regular monitoring infrastructure.

Here are some CloudWatch terms that you need to know:

- A Namespace corresponds to an AWS service. Typically this is simply the short name of the service prefixed with “AWS/”, as in “AWS/EC2” or “AWS/ELB”. You can find the full list here.

- A Metric is, well, a metric, like “CPUUtilization”. Note that this does not specify the source of the metric. In this case, it is left unspecified which EC2 instance’s CPU Utilization is being reported.

- For that, Dimensions are used. These are essentially key-value pairs, associated with each measurement, that identify (at least) where it came from. For example, when an EC2 instance reports a CPUUtilization metric, it also says that it’s InstanceId=X. Here InstanceId is a “dimension”. There can be up to 10 dimensions associated with a metric.
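To make the three terms concrete, here is a minimal sketch in Go of how a single metric series is identified. The `Dimension` and `MetricID` types and the `Key` method are hypothetical names made up for this illustration; they are not part of the AWS SDK.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Dimension is one key-value pair attached to a measurement.
// (Hypothetical type for illustration, not an AWS SDK type.)
type Dimension struct {
	Name  string
	Value string
}

// MetricID identifies one time series: the namespace, the metric
// name, and the full set of dimensions.
type MetricID struct {
	Namespace  string
	MetricName string
	Dimensions []Dimension
}

// Key returns a canonical string for the metric. Dimensions are sorted
// so that the order in which they were listed does not matter.
func (m MetricID) Key() string {
	dims := make([]string, 0, len(m.Dimensions))
	for _, d := range m.Dimensions {
		dims = append(dims, d.Name+"="+d.Value)
	}
	sort.Strings(dims)
	return m.Namespace + "/" + m.MetricName + "{" + strings.Join(dims, ",") + "}"
}

func main() {
	cpu := MetricID{
		Namespace:  "AWS/EC2",
		MetricName: "CPUUtilization",
		Dimensions: []Dimension{{Name: "InstanceId", Value: "i-fe0c9270"}},
	}
	fmt.Println(cpu.Key()) // AWS/EC2/CPUUtilization{InstanceId=i-fe0c9270}
}
```

Two series with the same namespace and metric name but different dimension values get different keys, which is the point of dimensions.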

For a given AWS service, say ASG, you can find the list of metrics and dimensions in its documentation (like this one for ASG). You can also see the list of all services and the metrics+dimensions that they report, in this CloudWatch documentation section.

This page does a good job of detailing the CloudWatch concepts, and is a good starting point to dig deeper.

Now let’s see how we can pull metrics data out of CloudWatch.

Getting at CloudWatch Data

The “Basic Monitoring” metric data can be queried via the CloudWatch APIs without having to enable anything explicitly. From the API set, the two APIs most interesting for us are ListMetrics and GetMetricStatistics.

- ListMetrics lists all available metrics+dimension combinations. For example, if you have 2 EC2 instances, each will report 7 metrics, and ListMetrics will return 14 entries.

- GetMetricStatistics actually returns the metric data. The way this works is that you specify a StartTime, an EndTime and a Period. AWS cuts up the duration between the start and end times into period-sized chunks, and for each period aggregates all available data for the metric. You can pick one or more of the aggregation functions sum, min, max, average and count. The metric itself is specified with a combination of the MetricName, Dimensions and the Namespace.

Note that when using GetMetricStatistics, you have to identify the metric completely, including all the dimensions that ListMetrics reported.

Let’s have a look at these APIs using the AWS CLI tool, which wraps these APIs as cloudwatch list-metrics and cloudwatch get-metric-statistics subcommands. Here’s what list-metrics outputs:

    $ aws cloudwatch list-metrics --namespace AWS/EC2
    {
        "Metrics": [
            {
                "Namespace": "AWS/EC2",
                "Dimensions": [
                    {
                        "Name": "InstanceId",
                        "Value": "i-fe0c9270"
                    }
                ],
                "MetricName": "CPUUtilization"
            },
            {
                "Namespace": "AWS/EC2",
                "Dimensions": [
                    {
                        "Name": "InstanceId",
                        "Value": "i-fe0c9270"
                    }
                ],
                "MetricName": "DiskReadOps"
            },
            [..snip..]
        ]
    }

There were 7 metrics in total, reported by a single EC2 instance with the instance ID i-fe0c9270. You can see how the instance ID comes out as the value of the dimension named “InstanceId”.

Forming the arguments for the get-metric-statistics command isn’t pretty. Remember that we need to identify the metric with namespace, name and dimension, the duration with start, end times and the period and finally also the statistic function to be used for aggregation. Here is how it looks:

    $ aws cloudwatch get-metric-statistics \
        --namespace AWS/EC2 --metric-name CPUUtilization \
        --dimensions Name=InstanceId,Value=i-fe0c9270 \
        --start-time 2015-12-14T09:53 --end-time 2015-12-14T10:53 \
        --period 300 --statistics Average
    {
        "Datapoints": [
            {
                "Timestamp": "2015-12-14T10:18:00Z",
                "Average": 0.33399999999999996,
                "Unit": "Percent"
            },
            {
                "Timestamp": "2015-12-14T10:08:00Z",
                "Average": 0.33399999999999996,
                "Unit": "Percent"
            },
            {
                "Timestamp": "2015-12-14T10:03:00Z",
                "Average": 0.32799999999999996,
                "Unit": "Percent"
            },
            {
                "Timestamp": "2015-12-14T10:13:00Z",
                "Average": 0.0,
                "Unit": "Percent"
            },
            {
                "Timestamp": "2015-12-14T09:53:00Z",
                "Average": 100.0,
                "Unit": "Percent"
            },
            {
                "Timestamp": "2015-12-14T09:58:00Z",
                "Average": 0.33799999999999997,
                "Unit": "Percent"
            }
        ],
        "Label": "CPUUtilization"
    }

We asked for data between 9:53 and 10:53 (UTC), split up into 5-minute periods (which also happens to be the frequency for “Basic Monitoring”), aggregated as an average. AWS returned 6 datapoints, covering the time from 9:53 to 10:18 (because it was not yet 10:53 when the query was run). It also said the unit for the metric was “Percent”, and wasn’t nice enough to sort the data for us. These cases are handled in the tool we describe below – read on!
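Since the datapoints come back in no particular order, a consumer has to sort them. Here is a minimal sketch in Go that decodes output shaped like the JSON above and sorts it by timestamp. The `Datapoint` and `Result` types and the `ParseSorted` function are hypothetical names for this illustration, and only the fields shown in the output above are modelled.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"time"
)

// Datapoint mirrors one entry of the "Datapoints" array shown above.
type Datapoint struct {
	Timestamp time.Time `json:"Timestamp"`
	Average   float64   `json:"Average"`
	Unit      string    `json:"Unit"`
}

// Result mirrors the top-level object printed by the CLI.
type Result struct {
	Datapoints []Datapoint `json:"Datapoints"`
	Label      string      `json:"Label"`
}

// ParseSorted decodes the JSON and sorts the datapoints oldest first.
func ParseSorted(raw []byte) (Result, error) {
	var r Result
	if err := json.Unmarshal(raw, &r); err != nil {
		return r, err
	}
	sort.Slice(r.Datapoints, func(i, j int) bool {
		return r.Datapoints[i].Timestamp.Before(r.Datapoints[j].Timestamp)
	})
	return r, nil
}

func main() {
	// A shortened copy of the output above, still out of order.
	raw := []byte(`{
	  "Datapoints": [
	    {"Timestamp": "2015-12-14T10:18:00Z", "Average": 0.33399999999999996, "Unit": "Percent"},
	    {"Timestamp": "2015-12-14T09:53:00Z", "Average": 100.0, "Unit": "Percent"},
	    {"Timestamp": "2015-12-14T10:03:00Z", "Average": 0.32799999999999996, "Unit": "Percent"}
	  ],
	  "Label": "CPUUtilization"
	}`)
	r, err := ParseSorted(raw)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for _, dp := range r.Datapoints {
		fmt.Printf("%s %s %.3f %s\n", r.Label, dp.Timestamp.Format("15:04"), dp.Average, dp.Unit)
	}
}
```

The timestamps are in RFC 3339 form, so Go's `time.Time` can decode them directly without a custom parser.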

S3 and CloudWatch

So what about S3, then?

The CloudWatch metrics that S3 provides can be found here. There are two of them:

- NumberOfObjects is the total number of objects in the bucket. The metric is reported with the dimensions BucketName=X and StorageType=AllStorageTypes.

- BucketSizeBytes is the total size in bytes of all objects of a certain storage class in the bucket. The metric is reported with the dimensions BucketName=X and StorageType as one of StandardStorage, StandardIAStorage or ReducedRedundancyStorage.

There are also some gotchas:

- The metrics are not real-time. They are collected and sent to CloudWatch internally by AWS only once a day. The values are reported with the Timestamp set to midnight UTC of each day.

- It is not clear when the metrics are computed and stored by S3. That is, if it is now 2015-12-14T09:30, the metrics for 2015-12-14 may not be available yet. They should be available sometime before 2015-12-14T23:59 and, when available, will be reported with the timestamp 2015-12-14T00:00. Ugh!

- Metrics for the Glacier storage class are not available.

Here are some ready-to-run commands you can use:

    # Get the size of all objects of storage class "StandardStorage" in
    # the bucket named "YOURBUCKETNAME".
    $ aws cloudwatch get-metric-statistics \
        --namespace AWS/S3 --metric-name BucketSizeBytes \
        --dimensions Name=BucketName,Value=YOURBUCKETNAME \
            Name=StorageType,Value=StandardStorage \
        --start-time 2015-12-14T00:00 --end-time 2015-12-14T00:10 \
        --period 60 --statistics Average

    # Get the count of all objects in "YOURBUCKETNAME".
    $ aws cloudwatch get-metric-statistics \
        --namespace AWS/S3 --metric-name NumberOfObjects \
        --dimensions Name=BucketName,Value=YOURBUCKETNAME \
            Name=StorageType,Value=AllStorageTypes \
        --start-time 2015-12-14T00:00 --end-time 2015-12-14T00:10 \
        --period 60 --statistics Average

Notes:

- The start time and end time should include midnight. Any other time of day will not yield any data. For the values as of 14-Dec-2015, specify the start time as 2015-12-14T00:00 and end time as 2015-12-14T00:10.

- While querying the size, you need to specify a storage class. It has to be one of StandardStorage, StandardIAStorage or ReducedRedundancyStorage. If you need the total across all storage classes, you need to add it up yourself.

- There is only one datapoint, so the aggregation function can be minimum, maximum, average or sum.
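Adding up the per-class sizes is straightforward once you have one value per storage class. Here is a minimal sketch in Go, assuming the sizes have already been fetched into a map keyed by the StorageType value. `TotalSize` is a hypothetical helper and the byte counts in `main` are made-up sample values.

```go
package main

import "fmt"

// storageClasses lists the StorageType values mentioned above.
var storageClasses = []string{
	"StandardStorage",
	"StandardIAStorage",
	"ReducedRedundancyStorage",
}

// TotalSize adds up the per-class sizes of one bucket. A class with no
// datapoint is simply absent from the map and counts as zero.
func TotalSize(sizes map[string]float64) float64 {
	var total float64
	for _, class := range storageClasses {
		total += sizes[class]
	}
	return total
}

func main() {
	// Made-up sizes for a bucket that uses two storage classes.
	sizes := map[string]float64{
		"StandardStorage":   1000,
		"StandardIAStorage": 250,
	}
	fmt.Printf("%.0f bytes\n", TotalSize(sizes)) // 1250 bytes
}
```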

A tool for accessing the S3 Metrics

It seems natural to collect these metrics each day and hand them off to a Graphite daemon for storage. So here’s a tiny tool that we wrote which does just that. It’s written in Go and uses the official AWS Go SDK. Let’s try it:

    $ go get github.com/rapidloop/s3report
    $ $GOPATH/bin/s3report -h
    s3report - Collects today's S3 metrics and reports them to Graphite
      -1    collect yesterday's metrics rather than today's
      -g graphite server
            graphite server to send metrics to (default "127.0.0.1:2003")
      -p prefix
            prefix for graphite metrics names (default "s3.us-west-1.")
    $ $GOPATH/bin/s3report
    s3.us-west-1.rlbucket3.standardstorage.size 5259947 1450051200
    s3.us-west-1.rlbucket3.objcount 7 1450051200
    s3.us-west-1.rlbucket4.standardstorage.size 126966514 1450051200
    s3.us-west-1.rlbucket4.objcount 157 1450051200
    s3.us-west-1.rlbucket4.standardiastorage.size 59331764 1450051200
    s3.us-west-1.rlbucket5.standardiastorage.size 64810 1450051200
    s3.us-west-1.rlbucket5.objcount 171 1450051200
    s3.us-west-1.rlbucket5.standardstorage.size 28893689 1450051200
    s3.us-west-1.rlbucket1.objcount 182 1450051200
    s3.us-west-1.rlbucket2.standardstorage.size 7352747 1450051200
    s3.us-west-1.rlbucket1.standardstorage.size 5709578 1450051200
    s3.us-west-1.rlbucket2.objcount 4491 1450051200
    sending to graphite server at 127.0.0.1:2003:
    done.

When invoked, s3report collects the size (per storage class) and the object count for each S3 bucket and reports them to a Graphite daemon (by default at 127.0.0.1:2003). Be sure to set the usual environment variables AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_REGION before running it.

If the metrics for the day are not ready when you run it, use the “-1” flag to collect the previous day’s metrics.

s3report is simple enough to hack on (it’s a single Go source file) and is MIT-licensed open source, so feel free to dig in and tweak it to your needs.

Using the S3 Metrics

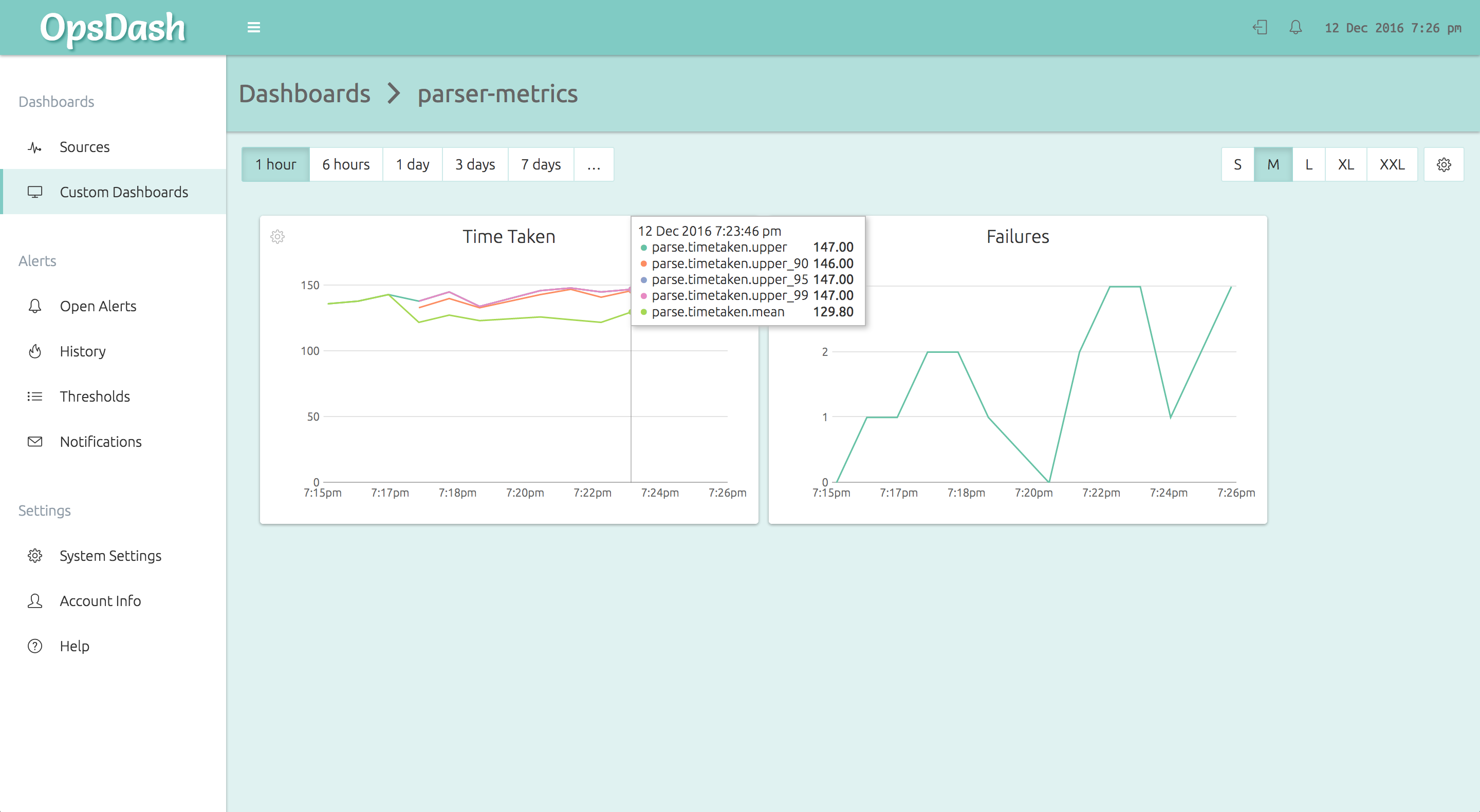

Gathering this type of information, storing, seeing and alerting on it is a common need in modern cloud infrastructure. OpsDash lets you consolidate your regular server, database and service monitoring, with custom and application metrics.

The OpsDash Smart Agent comes with built-in StatsD and Graphite interfaces. It accepts, aggregates and forwards these metrics to the central OpsDash servers, where you can visualize them on custom dashboards, set alerts and get notified.
