What does it take to keep your infrastructure bullet-proof? To keep the servers humming while whole regions go down, while developers push questionable code to prod, and while response times creep up?

Here’s our take on it. Tell us what you think!

1. Understand Workload Patterns

The work that the software on your servers is called upon to do usually follows patterns: daily peaks in web traffic, weekends with more report-processing jobs, weekly flash sales, and so on.

It is essential that you’re aware of these workload patterns: why, when and how the load peaks and varies. You should know facts such as “the CPU usage of our web nodes peaks at 80% at 10:00 AM ET.”
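As a rough illustration, a fact like that can be pulled out of raw metrics by averaging samples per hour of day. This is a minimal sketch, assuming you can export `(timestamp, value)` pairs already converted to your reference time zone; the function names and the readings are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def hourly_profile(samples):
    """Average a metric (e.g. CPU %) by hour of day.

    samples: iterable of (datetime, value) pairs, already converted to the
    time zone you reason in (for example, US Eastern).
    """
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[ts.hour].append(value)
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


def peak_hour(samples):
    """Return (hour, average) for the busiest hour of the day."""
    return max(hourly_profile(samples).items(), key=lambda item: item[1])


# Made-up CPU readings from two days, for illustration only.
readings = [
    (datetime(2024, 5, 6, 9, 0), 55.0),
    (datetime(2024, 5, 6, 10, 0), 80.0),
    (datetime(2024, 5, 7, 10, 0), 78.0),
    (datetime(2024, 5, 7, 14, 0), 60.0),
]
print(peak_hour(readings))  # (10, 79.0)
```

With weeks of real data, the same grouping by weekday instead of hour would surface weekly patterns such as weekend report jobs.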

And why is that, you ask? Because of resource bottlenecks.

2. Identify Bottlenecked Resources

The sum total of all your running servers represents a finite amount of computational resources. While every business dreams of keeping that total as close to actual demand as possible by adjusting its provisioning dynamically, the ground truth is that resource usage across servers is wildly non-uniform.

For example, if you have a cluster of web nodes that mostly serve pre-computed data from Redis/memcached nodes, you can be almost certain that memory is the resource they use most and disk bandwidth the one they use least. But if they pull bulky data from the Redis nodes, the network may be what runs near capacity. And if that data is actually images that you resize on the fly in code, it might be the CPU that is running hot.
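A minimal sketch of spotting each cluster’s bottleneck, assuming you already have per-resource utilization expressed as a fraction of capacity. The cluster names and figures below are made up to mirror the examples above.

```python
def bottleneck(utilization):
    """Return (most used, least used) resource names.

    utilization: mapping of resource name -> fraction of capacity in use
    (0.0 to 1.0), e.g. averaged over the cluster's busiest hour.
    """
    ranked = sorted(utilization, key=utilization.get)  # least to most used
    return ranked[-1], ranked[0]


# Hypothetical per-cluster utilization figures, for illustration only.
clusters = {
    "web-cache-readers": {"cpu": 0.35, "memory": 0.82, "network": 0.40, "disk_io": 0.05},
    "web-image-resizers": {"cpu": 0.91, "memory": 0.45, "network": 0.50, "disk_io": 0.10},
}

for name, usage in clusters.items():
    hot, cold = bottleneck(usage)
    print(f"{name}: bottleneck={hot}, most slack={cold}")
```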

Essentially, you end up with clusters that each have a different bottlenecked resource, and you need to know which is which. Why? Because of trends.

3. Spot Trends

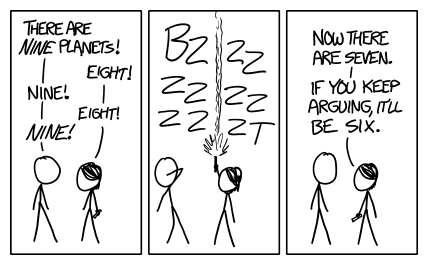

Just when you’ve figured all this out, things change. They always do. New features get added to the software, you upgrade or migrate databases, or your business strikes a new deal and traffic increases.

And data grows, every last bit of it: data in databases, in log files, in memcached nodes, in S3.

All of these show up as trends. You should be able to see the network bandwidth usage of your database servers rise over time as per-user data grows, or the average CPU usage of your web nodes jump after a new release.
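One simple way to put a number on both kinds of trend is a least-squares slope for gradual growth and a before/after mean comparison for a step change at a release. This is a sketch, assuming one sample per day and a known release index; the metric names and values are hypothetical, and real data is noisier, so treat the results as indicators rather than verdicts.

```python
from statistics import mean


def trend_slope(values):
    """Least-squares slope of equally spaced samples, in units per sample."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    x_bar = (n - 1) / 2  # mean of the indices 0..n-1
    y_bar = mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def step_change(values, release_index):
    """Mean after a release minus mean before it.

    release_index: index of the first sample taken after the release;
    must leave at least one sample on each side.
    """
    return mean(values[release_index:]) - mean(values[:release_index])


# Made-up daily averages, for illustration only.
db_network_gb = [100, 102, 104, 106, 108]  # creeping up as per-user data grows
web_cpu_pct = [40, 41, 39, 55, 56, 54]     # new release deployed on day 3

print(trend_slope(db_network_gb))   # 2.0 (GB per day)
print(step_change(web_cpu_pct, 3))  # 15 (percentage points)
```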

And that’s the crux of your job: running an adequately provisioned, efficient backend server farm that can adjust to the changing demands of the business and an ever-growing volume of data.

Well, how?

4. Monitor, Monitor, Monitor

The first step is insight. How do you learn your workload patterns and resource usage, and keep them in view so that you can detect trends?

Set up a monitoring system. It should tell you how your clusters and servers are doing, and whether they are trending toward consuming more resources than you are comfortable with.
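Whatever tool you pick, the core of an alert rule is a comparison against a limit you are comfortable with. A minimal sketch, assuming utilization samples as fractions of capacity and hypothetical per-resource limits; averaging a short window keeps a single spike from paging anyone.

```python
from statistics import mean

# Hypothetical comfort limits, as fractions of capacity; tune these for your fleet.
THRESHOLDS = {"cpu": 0.75, "memory": 0.80, "disk": 0.85}


def check(metrics, window=5):
    """Return alert messages for resources whose recent average is too high.

    metrics: mapping of resource name -> utilization samples (0.0-1.0),
    oldest first. Only the last `window` samples are averaged.
    """
    alerts = []
    for resource, samples in metrics.items():
        limit = THRESHOLDS.get(resource)
        if limit is None or not samples:
            continue  # no limit configured, or nothing to judge yet
        recent = mean(samples[-window:])
        if recent > limit:
            alerts.append(f"{resource}: averaging {recent:.0%} (limit {limit:.0%})")
    return alerts


# Made-up samples: one CPU spike, but memory is steadily high.
sample_metrics = {
    "cpu": [0.50, 0.60, 0.90, 0.50, 0.60],
    "memory": [0.82, 0.84, 0.85, 0.86, 0.88],
}
print(check(sample_metrics))
```

Here only memory is reported; the lone 90% CPU reading is absorbed by the window average.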

But a monitoring system is not worth much if you don’t...

5. Act on the Data

...act on the information it provides.

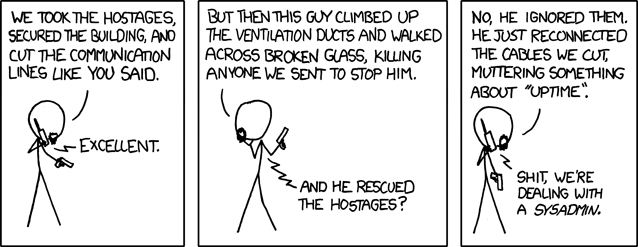

Part of the action is, of course, reactive. Like most things, servers, services and apps misbehave, have mood swings and go AWOL. Making them toe the line is a daily grind.

The other part is what it takes to ensure a smoother near-term future for your servers and your business: tracking trends in resource usage and figuring out where the slack and the bottlenecks are. Armed with this information, you can start tweaking your deployment.
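To make “slack” and “bottleneck” concrete, one could label each cluster by the peak utilization of its busiest resource. This is a sketch; the 40% and 80% cut-offs and the cluster data are arbitrary assumptions, not recommendations.

```python
# Hypothetical cut-offs, as fractions of capacity; not recommendations.
SLACK_BELOW = 0.40
HOT_ABOVE = 0.80


def classify(peaks):
    """Label clusters by the peak utilization of their busiest resource.

    peaks: mapping of cluster -> {resource: peak utilization (0.0-1.0)}.
    """
    verdicts = {}
    for cluster, usage in peaks.items():
        resource, peak = max(usage.items(), key=lambda item: item[1])
        if peak >= HOT_ABOVE:
            verdicts[cluster] = f"bottleneck: {resource} peaks at {peak:.0%}"
        elif peak < SLACK_BELOW:
            verdicts[cluster] = f"slack: even {resource} only peaks at {peak:.0%}"
        else:
            verdicts[cluster] = "ok"
    return verdicts


# Made-up peak figures for three clusters.
peaks = {
    "api": {"cpu": 0.88, "memory": 0.60},
    "batch": {"cpu": 0.30, "memory": 0.35},
    "web": {"cpu": 0.55, "memory": 0.50},
}
for cluster, verdict in classify(peaks).items():
    print(f"{cluster}: {verdict}")
```

Using peaks rather than averages is a deliberately conservative choice: a cluster that idles most of the day but saturates at its daily peak is not really slack.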

6. Optimize, Plan and Provision

There’s always slack to optimize away. And there’s always something that will go wrong if things carry on unchanged. Now that you’ve put numbers to it, you can plan optimizations, migrations and upgrades. The trends also tell you how urgent each need is, and let you forecast resource usage for an upcoming quarter or a big feature release.
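A straight-line forecast is the simplest way to turn a trend into a date. This sketch assumes roughly linear growth and one usage sample per day; it fits a least-squares line, projects usage a given number of days ahead, and estimates how many days remain before usage crosses capacity. The function names and the disk figures are hypothetical.

```python
def linear_fit(values):
    """Least-squares line through equally spaced samples: (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    x_bar = (n - 1) / 2
    y_bar = sum(values) / n
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    slope = num / den
    return slope, y_bar - slope * x_bar


def forecast(values, days_ahead):
    """Projected usage `days_ahead` days after the last sample."""
    slope, intercept = linear_fit(values)
    return intercept + slope * (len(values) - 1 + days_ahead)


def days_until_full(values, capacity):
    """Days after the last sample until the fitted line reaches capacity.

    Returns None when usage is flat or shrinking (no crossing ahead).
    """
    slope, intercept = linear_fit(values)
    if slope <= 0:
        return None
    crossing = (capacity - intercept) / slope  # day index, counted from the first sample
    return max(0.0, crossing - (len(values) - 1))


# Made-up daily disk usage in GB on a 1,000 GB volume.
usage = [500, 510, 520, 530, 540]
print(forecast(usage, 90))           # 1440.0, well past capacity within a quarter
print(days_until_full(usage, 1000))  # 46.0 days of headroom
```

Growth driven by user counts is often closer to exponential than linear, so it is worth refitting every cycle rather than trusting one projection.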

7. Improve Continuously

Rinse and repeat. It doesn’t end here. Go back to the monitoring step and check whether you’re really monitoring everything you need to, or whether you’re monitoring so much that your alerting signal-to-noise ratio suffers.

Maybe it’s time to set up that cluster to resize on demand? Or to move to a hosted service instead of the in-house one that everyone is afraid to touch?
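If you do set up on-demand resizing, the core decision is often a proportional rule: scale the replica count by the ratio of observed to target utilization. Kubernetes’ Horizontal Pod Autoscaler documents a formula of this shape; the sketch below is a standalone illustration with assumed defaults (target, bounds and tolerance), not any autoscaler’s actual code.

```python
import math


def desired_replicas(current, utilization, target=0.5,
                     min_replicas=2, max_replicas=20, tolerance=0.1):
    """Proportional resize rule: move average utilization toward `target`.

    current: running replica count; utilization: observed average (0.0-1.0).
    All defaults are illustrative assumptions.
    """
    ratio = utilization / target
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target; avoid flapping
    # Subtract a tiny epsilon so float noise (e.g. 6.000000000000001) doesn't round up.
    wanted = math.ceil(current * ratio - 1e-9)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 0.75))   # 6: scale out
print(desired_replicas(10, 0.25))  # 5: scale in
print(desired_replicas(4, 0.52))   # 4: within tolerance
```

The tolerance band and the min/max bounds matter as much as the formula: without them, a noisy metric can make the cluster resize back and forth every few minutes.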

Keep improving. It’s the only way to stay bullet-proof amidst constant change.